1. Installation requirements (check in advance)

Before you start, the machines for the Kubernetes cluster must meet the following requirements:

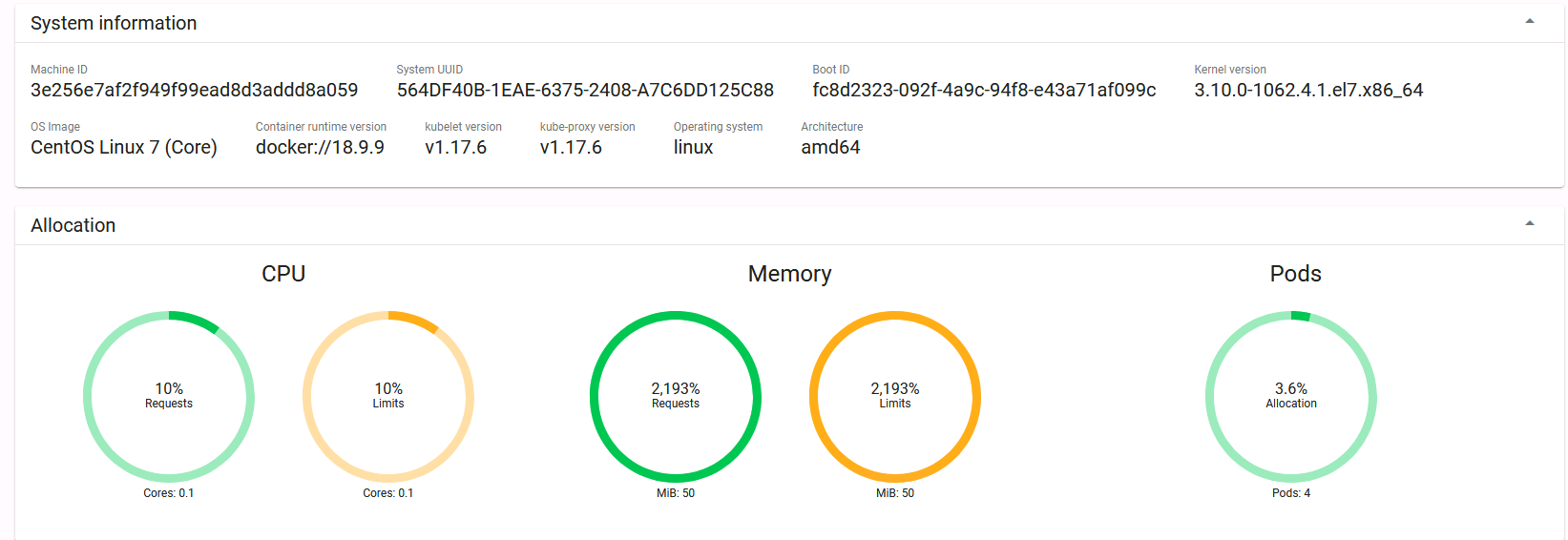

- Three machines running CentOS 7.5+ (minimal install)

- Hardware: 2 GB RAM, 2 CPUs, 30 GB disk

2. Installation steps

| Role | IP |
|---|---|
| master | 192.168.50.128 |
| node1 | 192.168.50.131 |
| node2 | 192.168.50.132 |

2.1 Pre-installation preparation

Note: perform all 7 steps in this subsection on every node (the master and both worker nodes).

(1) Disable the firewall and SELinux

~]# systemctl disable --now firewalld

~]# setenforce 0

~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

(3) Disable swap

~]# swapoff -a

~]# sed -i.bak 's/^.*centos-swap/#&/g' /etc/fstab

swapoff -a turns swap off only until the next reboot. To disable it permanently, comment out the swap mount line in /etc/fstab, which is what the sed command above does.
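The sed pattern above only matches a swap device whose name contains centos-swap, the default LVM volume name on CentOS. As a sketch for hosts where the swap device is named differently, the hypothetical helper comment_swap_lines below comments out every line whose third field is swap. It prints to standard output instead of editing /etc/fstab in place so that the result can be reviewed first; the sample file contents are made up for illustration.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# A line counts as a swap entry when its third whitespace-separated field is "swap".
comment_swap_lines() {
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$@"
}

# Demo on a temporary file (illustrative contents, not a real fstab).
demo_fstab=$(mktemp)
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/sda2               none swap sw       0 0
EOF
comment_swap_lines "$demo_fstab"
rm -f "$demo_fstab"
```

On a real node the output could be redirected to a new file, compared with /etc/fstab, and copied into place once it looks right.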

(4) Set the hostname

On the master node:

~]# hostnamectl set-hostname master

On worker node node1:

~]# hostnamectl set-hostname node1

On worker node node2:

~]# hostnamectl set-hostname node2

Run bash to start a new shell so that the prompt picks up the new hostname.

(5) Add hosts entries

~]# cat >>/etc/hosts <<EOF

192.168.50.128 master

192.168.50.131 node1

192.168.50.132 node2

EOF
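Because cat >> appends unconditionally, running the step above twice leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant follows; add_host_entry is a hypothetical helper name, and the demo writes to a temporary file rather than /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that pair is absent.
add_host_entry() {
    hosts_file=$1 ip=$2 name=$3
    if ! awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit !found }' "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Demo against a temporary file; on a real node the first argument would be /etc/hosts.
demo_hosts=$(mktemp)
add_host_entry "$demo_hosts" 192.168.50.128 master
add_host_entry "$demo_hosts" 192.168.50.131 node1
add_host_entry "$demo_hosts" 192.168.50.132 node2
add_host_entry "$demo_hosts" 192.168.50.128 master   # repeated call adds nothing
cat "$demo_hosts"
rm -f "$demo_hosts"
```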

(6) Enable IP forwarding and pass bridged traffic to iptables and ip6tables

~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

~]# sysctl --system # apply the settings immediately
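The two net.bridge.* keys are provided by the br_netfilter kernel module, so on a system where that module is not loaded, sysctl --system may report them as unknown. Running modprobe br_netfilter first is a common remedy; that is an assumption about your kernel setup, not something the steps above include. The hypothetical helper check_k8s_sysctl below is a small sketch that reports which of the three expected keys are missing from a sysctl.d-style file or not set to 1.

```shell
# Hypothetical helper: list required keys that are absent or not set to 1
# in the sysctl.d-style file given as the first argument.
check_k8s_sysctl() {
    conf=$1
    for key in net.ipv4.ip_forward \
               net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables; do
        awk -F= -v k="$key" '
            { name = $1; value = $2
              gsub(/[[:space:]]/, "", name); gsub(/[[:space:]]/, "", value) }
            name == k && value == "1" { ok = 1 }
            END { exit !ok }' "$conf" || echo "missing or not 1: $key"
    done
}

# Demo with a deliberately incomplete temporary file.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
check_k8s_sysctl "$demo_conf"
rm -f "$demo_conf"
```

On a node, the argument would be /etc/sysctl.d/k8s.conf; no output means all three keys are present.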

(7) Configure the yum repositories

All nodes use the base and EPEL repositories from the Alibaba Cloud mirror.

~]# mv /etc/yum.repos.d/* /tmp

~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

(8) Time zone and time synchronization

~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

~]# yum install dnf ntpdate -y

~]# dnf makecache

~]# ntpdate ntp.aliyun.com

2.2 Install Docker

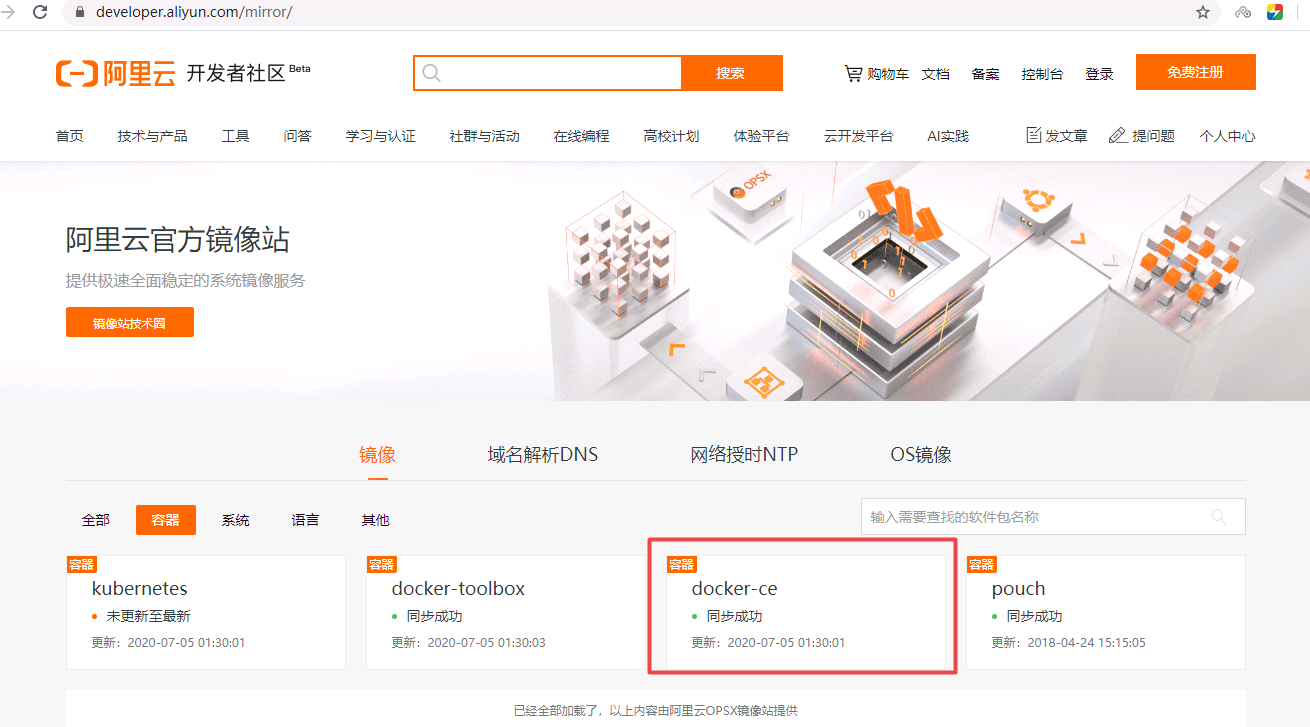

(1) Add the Docker yum repository

~]# curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

~]# cat /etc/yum.repos.d/docker-ce.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

.......

(2) Install docker-ce

List all installable versions:

~]# dnf list docker-ce --showduplicates

docker-ce.x86_64 3:18.09.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.5-3.el7 docker-ce-stable

.....

Here we install the latest version of Docker. Every node needs the Docker service.

~]# dnf install -y docker-ce docker-ce-cli

(3) Start Docker and enable it at boot

~]# systemctl enable --now docker

Check the version number to confirm that Docker installed successfully:

~]# docker --version

Docker version 19.03.12, build 48a66213fea

The command above shows only the Docker client version. The command below is the better check, because it reports the version of both the client and the server.

~]# docker version

Client:

Version: 19.03.12

API version: 1.40

Go version: go1.13.10

Git commit: 039a7df9ba

Built: Wed Sep 4 16:51:21 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.12

API version: 1.40 (minimum version 1.12)

Go version: go1.13.10

Git commit: 039a7df

Built: Wed Sep 4 16:22:32 2019

OS/Arch: linux/amd64

Experimental: false

(4) Switch Docker to a registry mirror

There are many registry mirrors in China, for example Alibaba Cloud, Tsinghua, USTC, and Docker's official China mirror.

~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://f1bhsuge.mirror.aliyuncs.com"]

}

EOF

Docker must be restarted to load the new registry mirror:

~]# systemctl restart docker

2.3 Install the Kubernetes packages

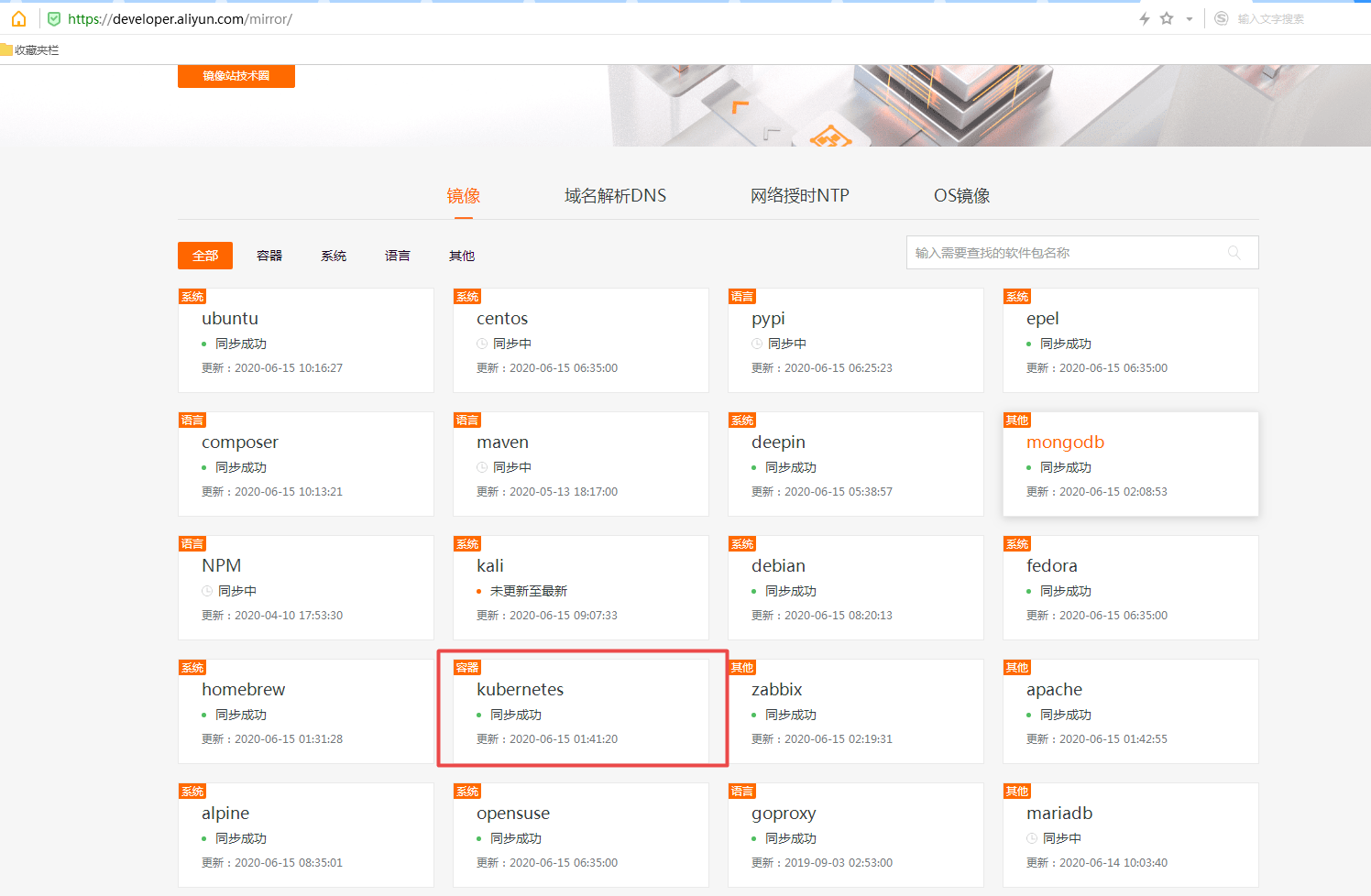

(1) Add the Kubernetes yum repository

To find it, open mirrors.aliyun.com in a browser and look for kubernetes; the page shows the repository definition.

~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

(2) Install kubeadm, kubelet and kubectl

Every node needs these components.

~]# dnf list kubeadm --showduplicates

kubeadm.x86_64 1.17.7-0 kubernetes

kubeadm.x86_64 1.17.7-1 kubernetes

kubeadm.x86_64 1.17.8-0 kubernetes

kubeadm.x86_64 1.17.9-0 kubernetes

kubeadm.x86_64 1.18.0-0 kubernetes

kubeadm.x86_64 1.18.1-0 kubernetes

kubeadm.x86_64 1.18.2-0 kubernetes

kubeadm.x86_64 1.18.3-0 kubernetes

kubeadm.x86_64 1.18.4-0 kubernetes

kubeadm.x86_64 1.18.4-1 kubernetes

kubeadm.x86_64 1.18.5-0 kubernetes

kubeadm.x86_64 1.18.6-0 kubernetes

Kubernetes releases change quickly, so we first list the available versions and then install version 1.18.6 on all nodes.

~]# dnf install -y kubelet-1.18.6 kubeadm-1.18.6 kubectl-1.18.6

(3) Enable kubelet at boot

Enable kubelet at boot, but do not start the kubelet service yet.

~]# systemctl enable kubelet

2.4 Deploy the master node with kubeadm

(1) Generate the initialization config file

Run the following command on the master node. A WARNING may appear, but it does not affect the deployment:

~]# kubeadm config print init-defaults > kubeadm-init.yaml

kubeadm-init.yaml is the file used for initialization. Edit the parameters marked with comments below.

[root@master1 ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.50.128
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # Alibaba Cloud image mirror
kind: ClusterConfiguration
kubernetesVersion: v1.18.3 # Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12 # the default is fine; a custom CIDR also works
  podSubnet: 10.244.0.0/16 # add the pod subnet
scheduler: {}

(2) Pull the images in advance

If you run kubeadm init directly, it pulls the images automatically along the way, which is slow. I recommend doing the two steps separately, so we pull the images first. Run the following command on the master node:

[root@master ~]# kubeadm config images pull --config kubeadm-init.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5

If you see two WARNING lines at the start of the output (not shown here), do not worry. They are only warnings and do not affect the setup.

Now that the images have been pulled, we can start the initialization.

(3) Initialize the Kubernetes master node

Run the following command:

[root@master ~]# kubeadm init --config kubeadm-init.yaml

[init] Using Kubernetes version: v1.18.3

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.50.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.50.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.50.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0629 21:47:51.709568 39444 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0629 21:47:51.711376 39444 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 14.003225 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

The whole process takes about 15 seconds. It is this fast because we pulled the images in advance.

If, as above, there are no errors and the output ends with the kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef command, the master has been initialized successfully.

Before using the cluster we still need to finish up as the final prompt instructs. Note that these commands run on the master node.

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

The master node is now initialized. The important part is the last lines of the output: this is the command that worker nodes use to authenticate and join the cluster. The sha256 value is a hash of the cluster CA public key, and a node must present the token and this hash to join the current cluster.

If you list the cluster nodes at this point, only the master node appears.

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady master 2m53s v1.18.6

Next we add the worker nodes to the Kubernetes cluster.

2.5 Join the worker nodes to the cluster

First, here is the join command again. It is the command printed after the master node initialized successfully.

Note that the token and hash in your own output will differ from mine, so use your own command. Mine is shown only for demonstration.

~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

(1) Join node1 to the cluster

[root@node1 ~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster

The line This node has joined the cluster means that the node joined successfully.

(2) Join node2 to the cluster

Run the same command on node2. Once all nodes have joined, run the following command on the master node to list the nodes in the cluster.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 2m53s v1.18.6

node1 NotReady 73s v1.18.6

node2 NotReady 7s v1.18.6

All three nodes are listed, but the cluster cannot be used yet: the second column shows every node as NotReady. This is because no network plugin has been installed. Here we use the flannel plugin.

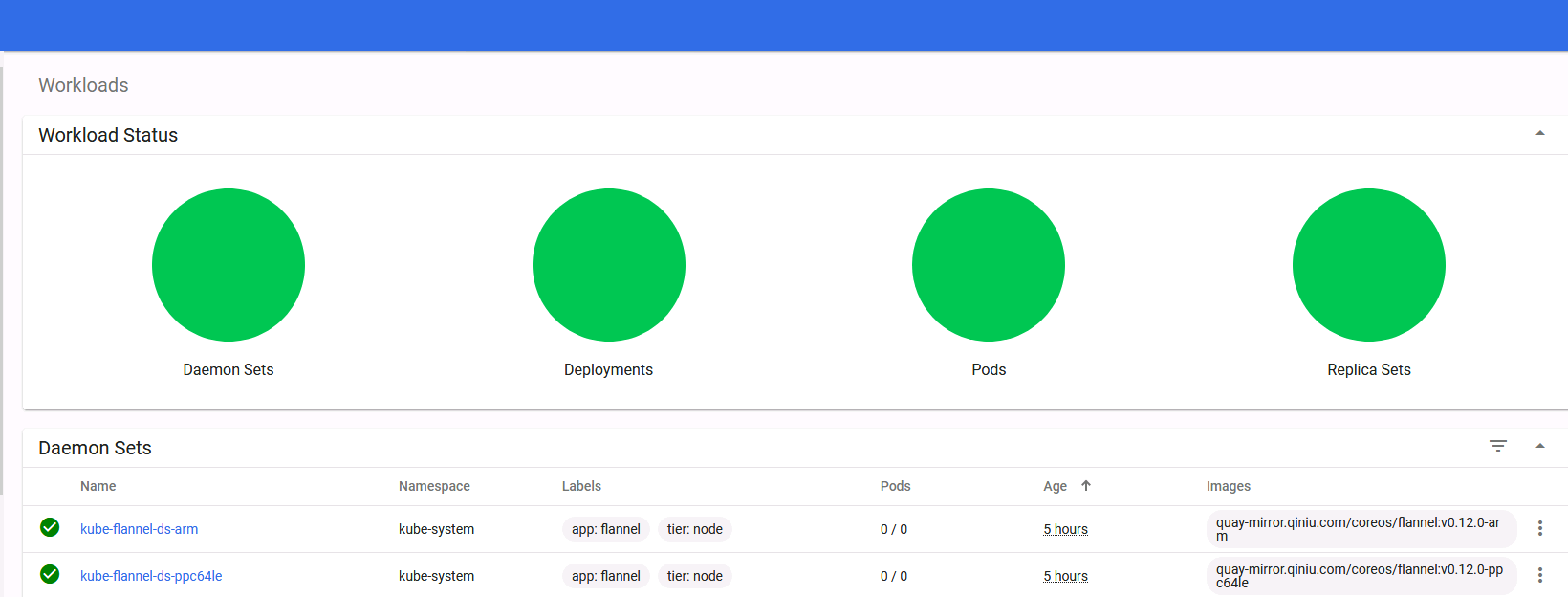

2.6 Install the Flannel network plugin

Flannel is an overlay network tool designed by the CoreOS team for Kubernetes. Its goal is to give each host that runs Kubernetes a complete subnet of its own.

Flannel provides a virtual network for containers by assigning a subnet to each host. It is based on Linux TUN/TAP, encapsulates IP packets in UDP to create the overlay network, and relies on etcd to track subnet allocation. (The kube-flannel.yml manifest used below defaults to the VXLAN backend and stores allocations through the Kubernetes API.)

(1) The default method

Most online tutorials install flannel with this command:

~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

In practice this fails for many users because of network restrictions in China, so the following approach can be used instead.

(2) Switch the flannel image source

Add the following entry to the local hosts file so that the host name resolves and the file can be downloaded:

199.232.28.133 raw.githubusercontent.com

Then download the flannel manifest:

[root@master ~]# curl -o kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Change the default image address: replace every quay.io in the YAML file with quay-mirror.qiniu.com.

[root@master ~]# sed -i 's/quay.io/quay-mirror.qiniu.com/g' kube-flannel.yml
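The sed expression above treats the dot in quay.io as a wildcard and rewrites every occurrence in the file. A slightly stricter sketch, assuming the manifest references its images on lines of the form image: quay.io/..., escapes the dot and touches only those lines. rewrite_flannel_images is a hypothetical name; the mirror host and the image tag are the ones that appear elsewhere in this article.

```shell
# Hypothetical helper: print the manifest with quay.io image references
# pointed at the mirror; other mentions of quay.io are left untouched.
rewrite_flannel_images() {
    sed -E 's#^([[:space:]]*image:[[:space:]]*)quay\.io/#\1quay-mirror.qiniu.com/#' "$1"
}

# Demo on a small illustrative fragment instead of the real kube-flannel.yml.
demo_manifest=$(mktemp)
cat > "$demo_manifest" <<'EOF'
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64
EOF
rewrite_flannel_images "$demo_manifest"
rm -f "$demo_manifest"
```

Writing the result to a new file, for example with a redirect, keeps the downloaded manifest available for comparison.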

Then apply the file on the master node:

[root@master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

The flannel image can now be pulled successfully. You can also use the kube-flannel.yml file that I provide.

- Check whether the kube-flannel pods are running normally

[root@master ~]# kubectl get pod -n kube-system | grep kube-flannel

kube-flannel-ds-amd64-8svs6 1/1 Running 0 44s

kube-flannel-ds-amd64-k5k4k 0/1 Running 0 44s

kube-flannel-ds-amd64-mwbwp 0/1 Running 0 44s
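In the listing above, two pods report READY 0/1 even though their STATUS is Running, so the STATUS column alone does not tell the whole story. As a sketch, the hypothetical filter pods_not_ready reads kubectl get pod output on standard input and prints the names of pods whose READY counts differ.

```shell
# Hypothetical filter: print pods whose READY column (ready/total) is not complete.
# Lines without a numeric READY column, such as the header, are skipped.
pods_not_ready() {
    awk '$2 ~ /^[0-9]+\/[0-9]+$/ { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Demo using the listing shown above.
pods_not_ready <<'EOF'
kube-flannel-ds-amd64-8svs6 1/1 Running 0 44s
kube-flannel-ds-amd64-k5k4k 0/1 Running 0 44s
kube-flannel-ds-amd64-mwbwp 0/1 Running 0 44s
EOF
```

On the master node the same filter could be fed from kubectl get pod -n kube-system.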

(3) What to do when the image cannot be pulled

If the check above shows that a kube-flannel pod is not running normally, the pod has a problem and needs further analysis.

Run kubectl describe pod xxxx and look for errors such as:

Normal BackOff 24m (x6 over 26m) kubelet, master3 Back-off pulling image "quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64"

Warning Failed 11m (x64 over 26m) kubelet, master3 Error: ImagePullBackOff

or

Error response from daemon: Get https://quay.io/v2/: net/http: TLS handshake timeout

All of these mean that a network problem is preventing the image from being pulled. I have prepared the flannel image in advance; importing it solves the problem.

[root@master ~]# docker load -i flannel.tar

2.7 Verify that the nodes are available

Wait a moment, then run the following command to check whether the nodes are available:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 82m v1.17.6

node1 Ready 60m v1.17.6

node2 Ready 55m v1.17.6

The nodes now report Ready, which means the cluster nodes are available.
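Instead of re-running the command by hand, the wait can be scripted. The following is a minimal sketch with two hypothetical helpers: nodes_not_ready filters kubectl get nodes output, and wait_until_nodes_ready polls a command until that filter finds nothing. A stub stands in for kubectl so that the sketch runs anywhere.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
nodes_not_ready() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: run "$@" up to TRIES times, INTERVAL seconds apart,
# and succeed as soon as its output lists only Ready nodes.
wait_until_nodes_ready() {
    tries=$1 interval=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        out=$("$@" 2>/dev/null) || out=""
        if [ -n "$out" ] && [ -z "$(printf '%s\n' "$out" | nodes_not_ready)" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$interval"
    done
    return 1
}

# Stub that stands in for "kubectl get nodes".
fake_get_nodes() {
    printf '%s\n' 'NAME STATUS ROLES AGE VERSION' \
                  'master Ready master 82m v1.17.6' \
                  'node1 Ready <none> 60m v1.17.6'
}
wait_until_nodes_ready 3 0 fake_get_nodes && echo "all nodes Ready"
```

On the master node the call might look like wait_until_nodes_ready 30 10 kubectl get nodes, which gives the network plugin up to five minutes to come up.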

3. Test the Kubernetes cluster

3.1 Cluster test

(1) Create an nginx pod

Now we create an nginx pod in the cluster to verify that it runs normally.

Run the following steps on the master node:

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

Now look at the pod and the service:

[root@master ~]# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-86c57db685-kk755 1/1 Running 0 29m 10.244.1.10 node1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 443/TCP 24h

service/nginx NodePort 10.96.5.205 80:32627/TCP 29m app=nginx

жү“еҚ°зҡ„з»“жһңдёӯпјҢеүҚеҚҠйғЁеҲҶжҳҜpodзӣёе…ідҝЎжҒҜпјҢеҗҺеҚҠйғЁеҲҶжҳҜserviceзӣёе…ідҝЎжҒҜгҖӮжҲ‘们зңӢservice/nginxиҝҷдёҖиЎҢеҸҜд»ҘзңӢеҮәserviceжҡҙжјҸз»ҷйӣҶзҫӨзҡ„з«ҜеҸЈжҳҜ32627гҖӮи®°дҪҸиҝҷдёӘз«ҜеҸЈгҖӮ

然еҗҺд»Һpodзҡ„иҜҰз»ҶдҝЎжҒҜеҸҜд»ҘзңӢеҮәжӯӨж—¶podеңЁnode1иҠӮзӮ№д№ӢдёҠгҖӮnode1иҠӮзӮ№зҡ„IPең°еқҖжҳҜ192.168.50.129

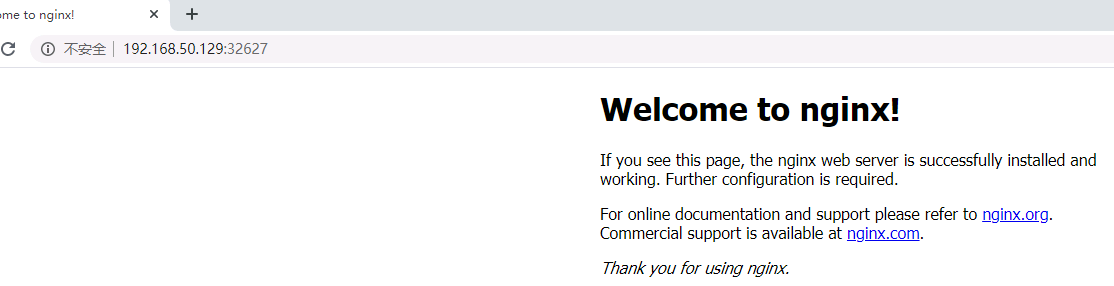

пјҲ2пјүи®ҝй—®nginxйӘҢиҜҒйӣҶзҫӨ

йӮЈзҺ°еңЁжҲ‘们и®ҝй—®дёҖдёӢгҖӮжү“ејҖжөҸи§ҲеҷЁ(е»әи®®зҒ«зӢҗжөҸи§ҲеҷЁ)пјҢи®ҝй—®ең°еқҖе°ұжҳҜпјҡhttp://192.168.50.129:32627
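The port can also be picked up in a script rather than read by eye. As a sketch, the hypothetical helper node_port_of extracts the node port from a PORT(S) value like the one shown above; NODE_IP is the node1 address from the role table and is only an example.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as 80:32627/TCP.
# Prints nothing when the value has no node port (for example 443/TCP).
node_port_of() {
    printf '%s\n' "$1" | sed -n -E 's#^[0-9]+:([0-9]+)/[A-Za-z]+$#\1#p'
}

NODE_IP=192.168.50.131            # node1, taken from the role table
port=$(node_port_of "80:32627/TCP")
echo "http://${NODE_IP}:${port}"
```

kubectl can also print the value directly, for example with kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}', and the resulting URL can be checked with curl from any machine that can reach the node.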

3.2 Install the dashboard

(1) Create the dashboard

First download the dashboard manifest. Because we added the hosts entry for raw.githubusercontent.com earlier, the download works.

~]# curl -o recommended.yaml https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally.

The Service is defined at roughly lines 32-44 of the file. Change it as follows:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001 # add this line; port 30001 can be changed
  selector:
    k8s-app: kubernetes-dashboard

- Apply this YAML file

[root@master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

...

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

- Check whether the dashboard is running normally

[root@master ~]# kubectl get pod,svc -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE

pod/dashboard-metrics-scraper-76585494d8-vd9w6 1/1 Running 0 4h50m 10.244.2.3 node2

pod/kubernetes-dashboard-594b99b6f4-72zxw 1/1 Running 0 4h50m 10.244.2.2 node2

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dashboard-metrics-scraper ClusterIP 10.96.45.110 8000/TCP 4h50m k8s-app=dashboard-metrics-scraper

service/kubernetes-dashboard NodePort 10.96.217.29 443:30001/TCP 4h50m k8s-app=kubernetes-dashboard

The output shows that kubernetes-dashboard-594b99b6f4-72zxw runs on node2 and that the exposed port is 30001, so the address is https://192.168.50.132:30001

- Open it in a browser

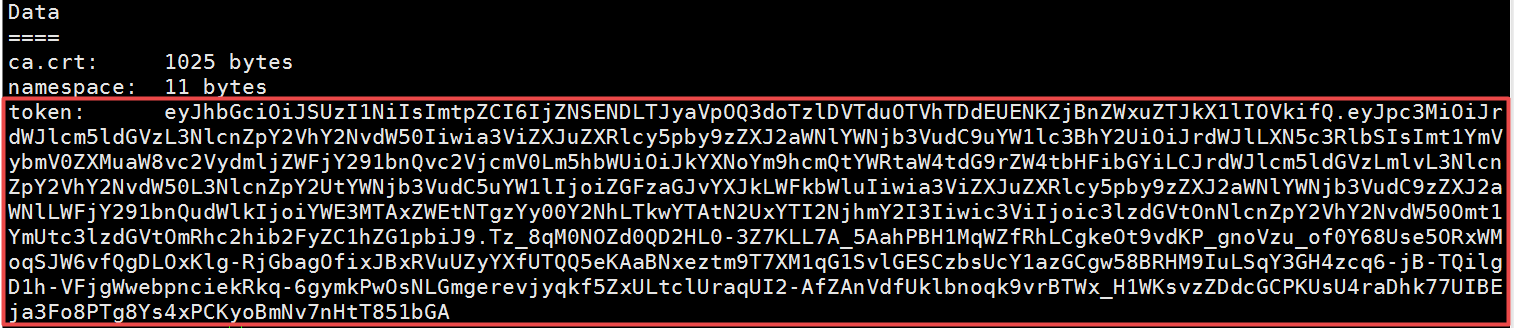

The login page asks for a token. The following command shows the token value.

~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Enter the token value to open the dashboard.

At this point we can log in, but we do not yet have permission to view cluster information because no cluster role has been bound. Try the steps above first, then continue with the steps below.

(2) Bind the cluster-admin role

~]# kubectl create serviceaccount dashboard-admin -n kube-system

~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Log in to the dashboard again with the token from this output.
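The describe output contains several fields besides the token. As a sketch, the hypothetical filter extract_token prints only the value of the token: line so that it can be copied or piped elsewhere. The sample input is abridged and the token value is a made-up placeholder.

```shell
# Hypothetical filter: print the value of the first "token:" line on standard input.
extract_token() {
    awk '$1 == "token:" { print $2; exit }'
}

# Demo with abridged, illustrative describe output.
extract_token <<'EOF'
Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      EXAMPLE-TOKEN-VALUE
EOF
```

On the master node, the kubectl describe secrets command above could be piped into extract_token.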

4. Summary of cluster errors

(1) Image not found when pulling images

[root@master ~]# kubeadm config images pull --config kubeadm-init.yaml

W0801 11:00:00.705044 2780 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

failed to pull image "registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.4": output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.4 not found: manifest unknown: manifest unknown

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

The requested Kubernetes image version is not available in the mirror (here it is too new), so choose a lower one by changing kubernetesVersion in kubeadm-init.yaml.
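Before pulling, it can help to confirm which version the config file actually requests. The sketch below uses a hypothetical helper, configured_k8s_version, that reads the kubernetesVersion field from a kubeadm config file; the demo file is a two-line excerpt rather than the full config.

```shell
# Hypothetical helper: print the kubernetesVersion value from a kubeadm config file.
configured_k8s_version() {
    awk '$1 == "kubernetesVersion:" { print $2; exit }' "$1"
}

# Demo on a temporary excerpt of kubeadm-init.yaml.
demo_cfg=$(mktemp)
cat > "$demo_cfg" <<'EOF'
kind: ClusterConfiguration
kubernetesVersion: v1.18.3 # Kubernetes version
EOF
configured_k8s_version "$demo_cfg"
rm -f "$demo_cfg"
```

On the master node the result could be compared with the output of kubeadm version -o short to spot a mismatch between the config and the installed tools.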

(2) Docker cgroup driver error

The following error is common while installing Kubernetes:

failed to create kubelet: misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"

The cause is that Docker and kubelet use different cgroup drivers; both must use the same one.

1. Change Docker's cgroup driver

Edit the /etc/docker/daemon.json file:

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

Then restart Docker:

systemctl daemon-reload

systemctl restart docker
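Section 2.2 already wrote a registry-mirrors entry to /etc/docker/daemon.json, so replacing the file with only exec-opts would drop the mirror. A sketch that keeps both settings follows. write_daemon_json is a hypothetical helper, and the target path is passed in so that the result can be inspected before it is copied to /etc/docker/daemon.json.

```shell
# Hypothetical helper: write a daemon.json that keeps the registry mirror from
# section 2.2 and also selects the systemd cgroup driver.
write_daemon_json() {
    cat > "$1" <<'EOF'
{
  "registry-mirrors": ["https://f1bhsuge.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo: write to a temporary path and show the result.
demo_json=$(mktemp)
write_daemon_json "$demo_json"
cat "$demo_json"
rm -f "$demo_json"
```

After Docker restarts, docker info reports the active driver on its Cgroup Driver line, which is a quick way to confirm that the change took effect.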

(3) Worker node reports that the connection to localhost:8080 was refused

Running kubectl get pod on a worker node fails as follows:

[root@node1 ~]# kubectl get pod

The connection to the server localhost:8080 was refused - did you specify the right host or port?

This happens because kubectl needs the kubernetes-admin credentials to reach the API server. Without a kubeconfig it falls back to localhost:8080.

Solution:

Copy /etc/kubernetes/admin.conf from the master node to the /etc/kubernetes directory on the worker node, then set the environment variable on the worker node:

[root@node1 images]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@node1 images]# source ~/.bash_profile

Run kubectl get pod on the worker node again:

[root@node1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-z4fc2 1/1 Running 0 20m

(4) Identity validation error when a worker node joins the cluster

[root@node1 ~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

W0801 11:06:05.871557 2864 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

error execution phase preflight: couldn't validate the identity of the API Server: cluster CA found in cluster-info ConfigMap is invalid: none of the public keys "sha256:a74a8f5a2690aa46bd2cd08af22276c08a0ed9489b100c0feb0409e1f61dc6d0" are pinned

To see the stack trace of this error execute with --v=5 or higher

The hash was copied incorrectly. Copy the join command printed after the master was initialized again and rerun it.
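If the original output is no longer available, kubeadm token create --print-join-command on the master prints a fresh join command. The hash can also be recomputed from the CA certificate; the sketch below wraps the usual openssl pipeline in a hypothetical helper, ca_cert_hash, and assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Hypothetical helper: print the sha256 hash of the public key in a CA certificate,
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
# Assumes an RSA key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# On the master node the CA certificate lives under /etc/kubernetes/pki.
if [ -r /etc/kubernetes/pki/ca.crt ]; then
    echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

The printed value should match the one in the join command; if it does not, the command being used belongs to a different cluster or an earlier initialization.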

(5) Swap not disabled when initializing the master node

[ERROR Swap]: running with swap on is not supported. Please disable swap

Disable swap:

swapoff -a

sed -i.bak 's/^.*centos-swap/#&/g' /etc/fstab

(6) kubectl get cs shows components as unhealthy

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

Edit the manifests of the scheduler and the controller-manager and remove --port=0 from each. The manifests are in /etc/kubernetes/manifests/, as kube-controller-manager.yaml and kube-scheduler.yaml.

Save the files; no manual restart is needed. After about half a minute the cluster recovers on its own, and kubectl get cs shows the components as healthy.
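The edit can also be done non-interactively. As a sketch, the hypothetical helper drop_port_zero prints a manifest without its --port=0 argument line; the demo runs on an illustrative fragment, not on a real manifest.

```shell
# Hypothetical helper: print a static Pod manifest with the "- --port=0" line removed.
drop_port_zero() {
    sed -E '/^[[:space:]]*-[[:space:]]+--port=0[[:space:]]*$/d' "$1"
}

# Demo on an illustrative fragment of a scheduler manifest.
demo_pod=$(mktemp)
cat > "$demo_pod" <<'EOF'
    - command:
      - kube-scheduler
      - --leader-elect=true
      - --port=0
EOF
drop_port_zero "$demo_pod"
rm -f "$demo_pod"
```

When applying this to the real files, it is probably safer to write the result to a temporary location and then move it into /etc/kubernetes/manifests/, since kubelet watches that directory and stray backup copies left inside it may be picked up as manifests.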

(7) Dashboard error: Get https://10.96.0.1:443/version: dial tcp 10.96.0.1:443: i/o timeout

This is really a cluster networking problem, but the nodes and the flannel pods all look healthy, so the cause is hard to track down. The quickest workaround is to schedule the dashboard onto the master node.

Edit the dashboard manifest and comment out the following lines (around lines 232-234):

      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      # tolerations:
      #   - key: node-role.kubernetes.io/master
      #     effect: NoSchedule

That is, comment out the last three lines above.

Then add the node that the pod should run on:

  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      nodeName: master
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta8
          imagePullPolicy: Always
          ports:

At around line 190, add the line nodeName: master.

After saving, run kubectl apply again to update the deployment.

If you want to investigate further, check whether the flannel subnet definition is the cause.

5. References

Blog posts I consulted, recorded here: https://www.cnblogs.com/FengGeBlog/p/10810632.html

Link to this article: https://www.yunweipai.com/38516.html
